Abstract

This whitepaper explores the innovative CUBE (Customized Ultra-Bandwidth Elements) technology developed by Winbond Electronics Corporation, designed to revolutionize AI computing on edge platforms. CUBE addresses the increasing demand for AI applications by providing a high-bandwidth, power-efficient, compact, and cost-effective memory solution, available as a ready-to-deploy technology to module makers and System-on-Chip producers. This paper explains the purpose of CUBE, the end-user demands it addresses, its customer profile, and how it overcomes existing market shortcomings.

Introduction

Purpose of CUBE Technology

Winbond Electronics Corporation has created CUBE technology to deliver the massive increase in memory-interface bandwidth needed for systems to service the rapidly escalating demands for AI applications on edge computing platforms. CUBE enables remarkable power efficiency, exceptional performance, compact size, and cost-effectiveness, delivering a significant boost to system capabilities, response times, and energy efficiency.

End-user Demand

CUBE is implemented in devices that support consumer, industrial, financial, healthcare, government, and other activities. Recently, generative AI has arrived and has been adopted enthusiastically across all sectors. Characterized by complex architectures and large model sizes, generative AI places heavy demands on system resources. The models consist of numerous parameters that require significant memory bandwidth for quick data access during inference. Generating responses and creating content demands a large volume of computation, straining processing resources and potentially degrading overall system performance. Additionally, the need to store and manipulate extensive model weights contributes to high memory usage.
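As a rough illustration of why model size translates into bandwidth demand, the sketch below applies a common rule of thumb for memory-bound autoregressive inference: generating each token streams roughly all model weights from memory once, so peak tokens per second is bounded by memory bandwidth divided by model size in bytes. The helper name `max_tokens_per_second`, the 7-billion-parameter model, and the 8-bit weights are hypothetical; the estimate ignores caches, KV-cache traffic, and compute limits.

```python
# Rule-of-thumb sketch (an assumption, not a CUBE specification): in memory-bound
# autoregressive decoding, each generated token reads roughly all model weights
# once, so throughput is capped at bandwidth / model size.


def max_tokens_per_second(bandwidth_gb_per_s: float,
                          params_billions: float,
                          bytes_per_param: float) -> float:
    """Upper bound on tokens/s for a memory-bound decoder.

    Ignores on-chip caches, KV-cache traffic, and compute limits.
    """
    model_bytes = params_billions * 1e9 * bytes_per_param
    return bandwidth_gb_per_s * 1e9 / model_bytes


if __name__ == "__main__":
    # Hypothetical 7B-parameter model with 8-bit (1-byte) weights,
    # evaluated at the per-die bandwidth range quoted later in this paper.
    for bw in (16.0, 256.0):
        print(f"{bw:>6.0f} GB/s -> {max_tokens_per_second(bw, 7, 1):.1f} tokens/s")
```

Under these assumptions, raising bandwidth from 16GB/s to 256GB/s lifts the ceiling from about 2.3 to about 36.6 tokens per second, which is why bandwidth, rather than raw compute, often limits large-model inference at the edge.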

More generally, a wide variety of AI applications leverage the expressive power of large models to handle intricate patterns and relationships within their respective domains, which drives up computational demands and resource requirements. These include computer vision applications, which use convolutional neural networks (CNNs) for image recognition and often employ large models to learn intricate patterns and features in visual data.

Other applications include natural language processing, which uses AI models for tasks such as sentiment analysis, and speech recognition, which uses deep neural networks with substantial parameter counts to enhance accuracy and understand complex speech patterns. There are also reinforcement learning applications, which may use neural networks to represent complex policies or value functions, and recommendation systems such as those employed by streaming services or e-commerce platforms. These often have large architectures to capture and analyze user preferences.

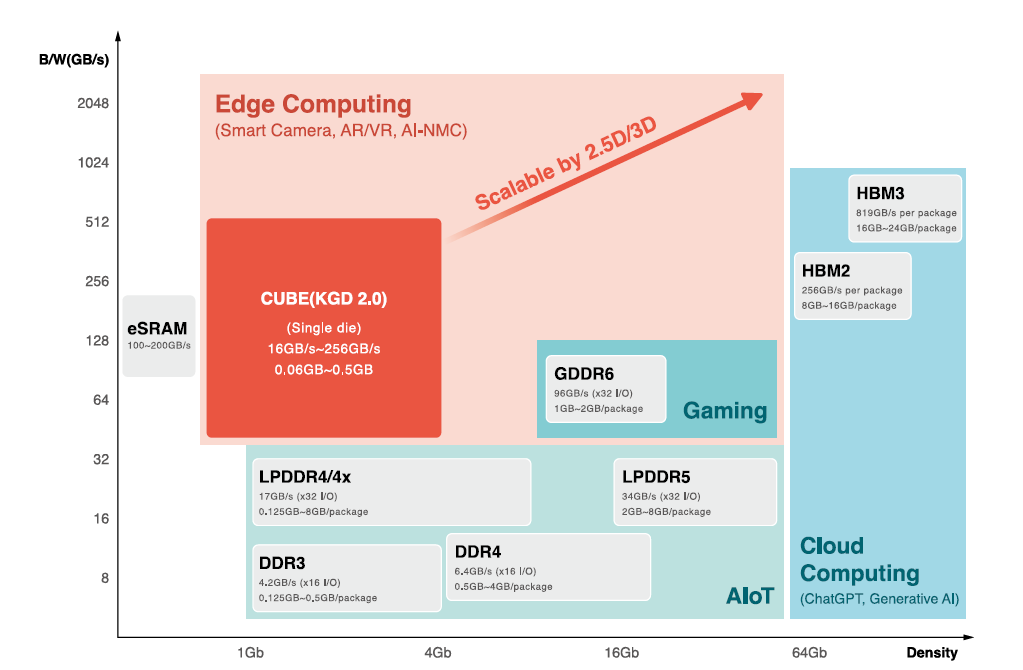

Figure 1. Scalable CUBE for Edge Computing

CUBE Customer Profile

CUBE is applicable to chip makers, module makers, and system builders that make memory products and equipment intended for edge deployments. The technology offers high bandwidth, power efficiency, and customizability.

Limitations of Existing Technologies

Current Market Issues

To address the needs of systems hosting AI applications, memory chips and modules must offer suitable performance across several parameters, of which sheer bandwidth is only one. Others, including power efficiency, size, and thermal management, are especially important in edge scenarios.

Existing memory solutions face bandwidth limitations that impact system performance. Physical characteristics such as the number of IC pins, the data-transfer rate per pin, and the width of the memory bus play a significant role in determining the interface bandwidth.
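The relationship between bus width, per-pin data rate, and interface bandwidth can be sketched as a one-line calculation. The function name and both example configurations are hypothetical, chosen only to show that a wide interface can reach high bandwidth at a modest per-pin rate while a narrow interface must push each pin much faster.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, rate_gbps_per_pin: float) -> float:
    """Peak interface bandwidth in GB/s: data pins x per-pin rate, bits -> bytes."""
    return bus_width_bits * rate_gbps_per_pin / 8


if __name__ == "__main__":
    # Hypothetical configurations chosen only to illustrate the trade-off.
    narrow_fast = peak_bandwidth_gb_per_s(32, 6.4)   # few pins, high per-pin rate
    wide_slow = peak_bandwidth_gb_per_s(1024, 2.0)   # many pins, modest per-pin rate
    print(f"32-bit @ 6.4 Gb/s:  {narrow_fast:.1f} GB/s")
    print(f"1024-bit @ 2 Gb/s: {wide_slow:.1f} GB/s")
```

Raising bandwidth by widening the bus rather than by increasing the per-pin rate relieves the signal-integrity and power pressures that grow with signaling speed.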

Memory chip and module designers must work within design and manufacturing-process constraints that can restrict the bandwidth they are able to provide. In addition, signal integrity becomes a critical concern at higher speeds, as attenuation, crosstalk, and reflections can limit the achievable bandwidth.

Moreover, increasing bandwidth can come at the expense of higher power consumption or reduced efficiency. This can create unwanted thermal-management challenges and compromise the operation of battery-powered edge devices. In addition, certain solutions require larger form factors, limiting their suitability for compact devices.

Future Challenges

The constraints on bandwidth, energy demand, and form factor, which face all memory technologies, are likely to become more noticeable and problematic in the future with the increasing user reliance on AI applications and, in particular, those that depend on large models. The needs of this emerging and fast-growing category of workloads will necessitate more powerful and energy-efficient computing capabilities.

CUBE as the Solution

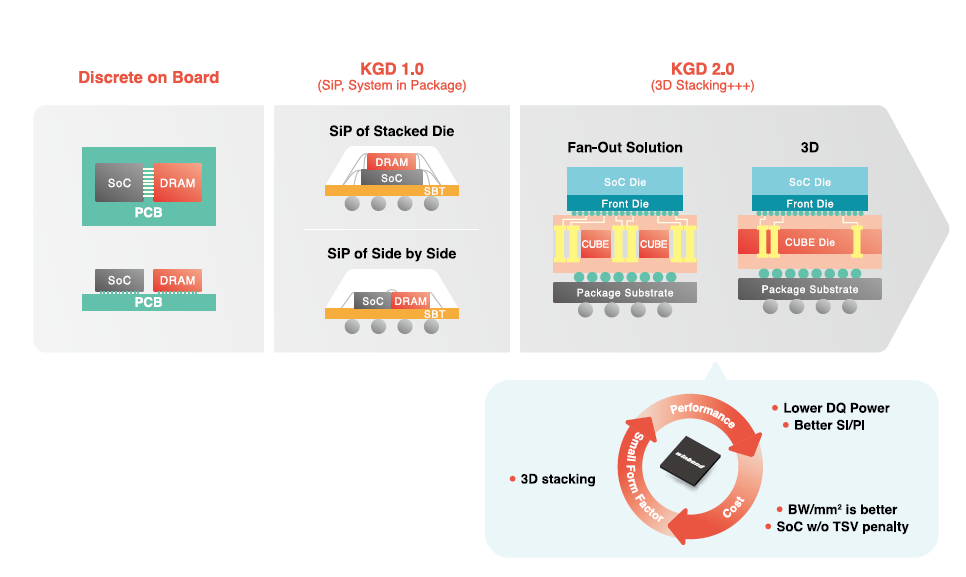

CUBE addresses the shortcomings of conventional memory IC and module solutions through novel approaches to increasing I/O count and raising data speed, support for Through-Silicon Via (TSV) technology as an option, and its 3D architecture that reduces thermal dissipation issues.

As an innovative, patented high-bandwidth memory interface technology, CUBE enables memory modules to be optimized for seamless performance when running AI models with large parameter counts, whose demands outstrip the bandwidth available from conventional memory modules. While enhancing bandwidth, CUBE also reduces power consumption.

CUBE enhances the performance of front-end 3D structures such as chip on wafer (CoW) and wafer on wafer (WoW), as well as back-end 2.5D/3D chip on Si-interposer on substrate and fan-out solutions. In addition, CUBE is easily adopted in new product designs.

CUBE is also suitable for power-conscious, high-bandwidth edge/endpoint AI devices that combine the strengths of cloud and edge engines. Such devices are expected to be the next phase in making advanced AI applications more accessible while overcoming security and cost issues.

Suitable for power-conscious designs, CUBE enables seamless and efficient deployment of AI models across different platforms and use cases, including edge devices and surveillance systems.

Figure 2: KGD Evaluation

CUBE in Detail

CUBE excels by providing high-bandwidth memory solutions that address the limitations of current offerings, thereby enhancing overall system performance.

CUBE also offers outstanding power efficiency, consuming less than 1pJ/bit, which makes it particularly well-suited for energy-sensitive applications.

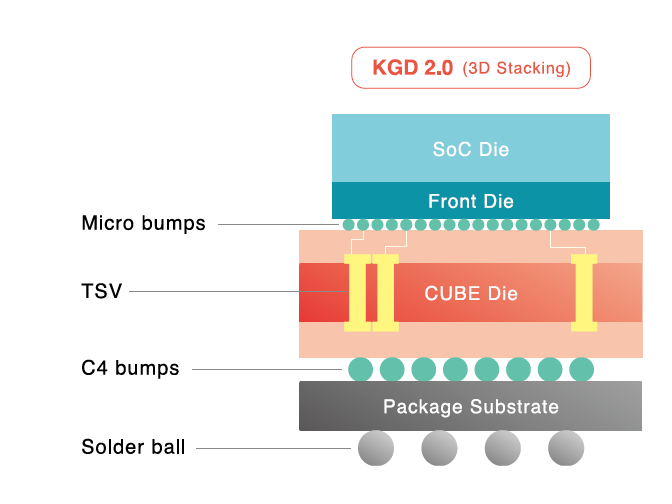

Moreover, the compact form factor, achieved through 3D stacking options and a small size, makes CUBE an ideal choice for portable and space-constrained devices. The innovative 3D architecture of CUBE strategically places the System on Chip (SoC) on the top die close to the heatsink, effectively mitigating heat dissipation concerns associated with AI computing.

Another key feature is CUBE's flexibility in design, allowing for customization to meet the specific requirements of various applications and so provide tailored solutions for customers. The integration of Through-Silicon Vias (TSVs) in CUBE contributes to improved power delivery, signal integrity, and overall system efficiency.

CUBE's flexible design allows customization of die area, optimized according to specific customer SoC specifications.

Figure 3: CUBE 3D stacking

Memory Densities and Key Features

CUBE can be designed from 1-8Gb/die based on the D20 process, or 16Gb/die on D16. Both non-TSV and TSV stacking are available, giving flexibility to optimize memory bandwidth for various applications.
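Because densities are quoted per die in gigabits, the capacity of a stacked configuration is a simple product converted to bytes. The sketch below assumes a stack of identical dies; the helper name and the stack heights in the example are hypothetical and are not stated in this paper, while the 8Gb (D20) and 16Gb (D16) die densities come from the text.

```python
def stack_capacity_gbytes(density_gbit_per_die: float, dies_in_stack: int) -> float:
    """Total capacity in gigabytes for a stack of identical dies."""
    if dies_in_stack < 1:
        raise ValueError("a stack needs at least one die")
    # Gigabits per die x number of dies, converted from bits to bytes.
    return density_gbit_per_die * dies_in_stack / 8


if __name__ == "__main__":
    # Hypothetical stack heights; only the per-die densities come from the text.
    print(stack_capacity_gbytes(8, 4))   # four 8Gb D20 dies
    print(stack_capacity_gbytes(16, 2))  # two 16Gb D16 dies
```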

CUBE delivers exceptional power efficiency, consuming less than 1pJ/bit in the D20 process, extending operating time and optimizing energy usage.
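An energy-per-bit figure converts directly into power once a transfer rate is fixed: power equals bits moved per second times energy per bit. The sketch below treats the quoted figure as energy per transferred bit (an assumption about how the metric is defined) and uses 1 pJ/bit as an upper bound, since the text states "less than 1pJ/bit". The helper name is hypothetical.

```python
def interface_power_watts(bandwidth_gb_per_s: float, energy_pj_per_bit: float) -> float:
    """Power in watts = bits transferred per second x energy per bit."""
    bits_per_second = bandwidth_gb_per_s * 1e9 * 8
    return bits_per_second * energy_pj_per_bit * 1e-12


if __name__ == "__main__":
    # 1 pJ/bit is used as an upper bound for a figure quoted as "less than 1pJ/bit".
    for bw in (16.0, 256.0):
        print(f"{bw:>5.0f} GB/s -> at most {interface_power_watts(bw, 1.0):.3f} W")
```

Under this assumption, a die running at its full 256GB/s would spend at most about 2 W on data transfer, and about 0.13 W at 16GB/s.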

CUBE's I/O interface supports a data rate of 2Gbps per I/O with up to 1K I/Os, providing total bandwidth ranging from 16GB/s to 256GB/s per die. In this way, CUBE delivers accelerated performance that exceeds industry standards and enhances power and signal integrity through micro-bump (uBump) or hybrid bonding.
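The per-die bandwidth follows from multiplying the I/O count by the per-I/O data rate and dividing by 8 bits per byte. The worked arithmetic below confirms the upper end of the quoted range. The paper does not state how the 16GB/s lower end is configured, so the 64-I/O case is only one configuration consistent with that figure, shown as an assumption.

```latex
\[
B_{\text{die}} = \frac{N_{\text{I/O}} \times f_{\text{I/O}}}{8}
\]
\[
N_{\text{I/O}} = 1024,\; f_{\text{I/O}} = 2\,\text{Gb/s}
\;\Rightarrow\;
B_{\text{die}} = \frac{1024 \times 2\,\text{Gb/s}}{8} = 256\,\text{GB/s}
\]
\[
N_{\text{I/O}} = 64,\; f_{\text{I/O}} = 2\,\text{Gb/s}
\;\Rightarrow\;
B_{\text{die}} = \frac{64 \times 2\,\text{Gb/s}}{8} = 16\,\text{GB/s}
\]
```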

With densities from 1 to 8Gb/die on the D20 process, a flexible design, and 3D stacking options, CUBE accommodates smaller form factors. The introduction of through-silicon vias (TSVs) further enhances performance, improving signal integrity, power integrity, and heat dissipation.

Moreover, TSV technology, as well as uBump/hybrid bonding, reduces power consumption and saves SoC design area, enabling a cost-effective solution. Efficient 3D stacking, leveraging TSVs, eases integration with advanced packaging technologies. By enabling reduced die size, CUBE drives device cost reductions, greater energy efficiency through shorter power paths, and more compact, lightweight designs.

Access to CUBE Technology

Winbond actively engages with partners, including IP design houses, foundries, and OSATs, fostering collaboration and comprehensive ecosystem development.

Conclusion

CUBE's high bandwidth, low power consumption, and advanced technology facilitate efficient data transfer, contributing to the integration of edge AI.

By delivering higher bandwidth and energy efficiency, faster response times, customization, and compact die sizes, CUBE is pivotal in unleashing the full potential of AI applications. It facilitates the migration of powerful AI into edge devices, as well as into hybrid edge/cloud use cases.

Applications for CUBE technology include edge computing and consumer electronics.

CUBE represents a paradigm shift in Edge AI computing, offering a comprehensive solution to the evolving demands of AI applications. With its focus on power efficiency, high performance, and flexible design, CUBE is ready to shape the future of AI-driven technologies.

For More Information

For more information on Winbond products, applications, or services, please contact us via Technical Support.
